This morning I rebooted my test box running VMware ESXi 3.5 to complete the upgrade from Update 3 to Update 4. The hypervisor came back up, but no guests were running. When I opened the VI Client, it reported that no datastores were configured and that it could not find any of the virtual machines in my inventory. It could see the internal disks and that they were formatted VMFS, but it would not let me do anything other than format them again.

Normally this would simply have annoyed me. I would have lost my test VMs, but they don't take long to build, so I'd have reformatted the disks and gone on with my day. Unfortunately, within the last week we had temporarily moved a critical application's VM to this box and had not properly reconfigured its backups. I could restore from the week-old backup, but there would be hell to pay.

Since the VMFS partitions were clearly visible, I felt I had a chance. I'm still new to ESX/ESXi, though, so my first step was to flip over to my always-running irssi session (if you use IRC and aren't running irssi inside screen, go Google it now and enjoy) and ask for help in #shsc and #vmware. #shsc always has a few people idling who work on large VMware installs, and #vmware was the obvious choice. While waiting for input from IRC, I turned to Google for my next step. I knew ESXi can be accessed via SSH, but that access is disabled by default, so I looked up how to turn it on. A few minutes later, after bringing a monitor over to the machine and rebooting it, I had SSH access and could go through the system logs from the comfort of my laptop.

In /var/log/messages I found two entries referencing my SATA controller that looked interesting:

May 5 14:34:35 vmkernel: 0:00:06:39.406 cpu0:3616)ALERT: LVM: 4482: vmhba000:0:0:3 may be snapshot: disabling access. See resignaturing section in SAN config guide.

May 5 14:34:35 vmkernel: 0:00:06:39.408 cpu0:3616)ALERT: LVM: 4482: vmhba0:0:0:1 may be snapshot: disabling access. See resignaturing section in SAN config guide.
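A short script makes it easier to pull alerts like these out of a long log. The following is a minimal sketch in Python. It assumes the message text matches the two entries above and that device names follow the ESX 3.x `vmhbaA:T:L:P` convention (adapter, target, LUN, partition). The function name `find_snapshot_alerts` is hypothetical, and because ESXi's console is a stripped-down environment, the sketch assumes you copy the log to a workstation first. It uses `finditer` instead of a single match per line because entries copied out of a terminal sometimes end up run together on one line.

```python
import re

# Assumed format, based on the vmkernel alerts quoted above.
# Device names are vmhbaA:T:L:P = adapter, target, LUN, partition.
SNAPSHOT_ALERT = re.compile(
    r"ALERT: LVM: \d+: (vmhba(\d+):(\d+):(\d+):(\d+)) may be snapshot: disabling access"
)


def find_snapshot_alerts(lines):
    """Yield (device, adapter, target, lun, partition) for every snapshot alert.

    `lines` can be any iterable of strings, such as an open log file.
    """
    for line in lines:
        # finditer catches several alerts even if they share one line.
        for match in SNAPSHOT_ALERT.finditer(line):
            device, *numbers = match.groups()
            yield (device, *map(int, numbers))
```

Passing an open file object works directly, since iterating over a file yields its lines.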

After a quick trip to Google, these messages led me to VMware's SAN Configuration Guide, which describes similar issues occurring on SANs. I tried enabling the resignaturing option, and my datastores magically reappeared. After renaming them back to their original names and turning the resignaturing option back off, I had all my data. I was also able to download the disk images and VMX files, so I was safe in the event of a major problem.
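Why does flipping that option bring the datastores back, and why do they need renaming afterwards? The following is a deliberately simplified toy model in Python. It is not VMware's code, and every name in it is hypothetical. It assumes that:

- the host compares a device identity recorded in the VMFS metadata with the identity of the disk it is currently reading;
- on a mismatch, the host refuses access unless resignaturing is enabled;
- on resignature, the host records the new identity and relabels the volume.

The `snap-<8 hex digits>-<old label>` label format and the device-ID strings are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VmfsVolume:
    """Toy stand-in for a VMFS volume (hypothetical, not a VMware structure)."""
    label: str
    recorded_device_id: str  # identity stored in the volume metadata


def mount(volume: VmfsVolume, current_device_id: str,
          enable_resignature: bool, snap_id: int = 1) -> Optional[str]:
    """Return the label the volume mounts under, or None if access is disabled."""
    if volume.recorded_device_id == current_device_id:
        return volume.label  # identity matches: mount as-is
    if not enable_resignature:
        return None  # mismatch: treated as a possible snapshot, access disabled
    # Resignature: record the new identity and relabel (assumed "snap-" format).
    volume.recorded_device_id = current_device_id
    volume.label = f"snap-{snap_id:08x}-{volume.label}"
    return volume.label


# Walk through the sequence described in this post with made-up device IDs.
vol = VmfsVolume(label="datastore1", recorded_device_id="ich9-old-naming")
print(mount(vol, "ich9-new-naming", enable_resignature=False))  # None
print(mount(vol, "ich9-new-naming", enable_resignature=True))   # snap-00000001-datastore1
vol.label = "datastore1"  # rename back to the original name
print(mount(vol, "ich9-new-naming", enable_resignature=False))  # datastore1
```

In this model, resignaturing rewrites the recorded identity to match the current device. The volume therefore keeps mounting after the option is turned back off, and only the label needs fixing by hand.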

At this point I could see my VMs, but the VI Client inventory was still convinced they were on the "old drives". After a bit more time on Google, I discovered the Import feature in the datastore browser, which let me bring the VMs back into inventory and get them booting again.

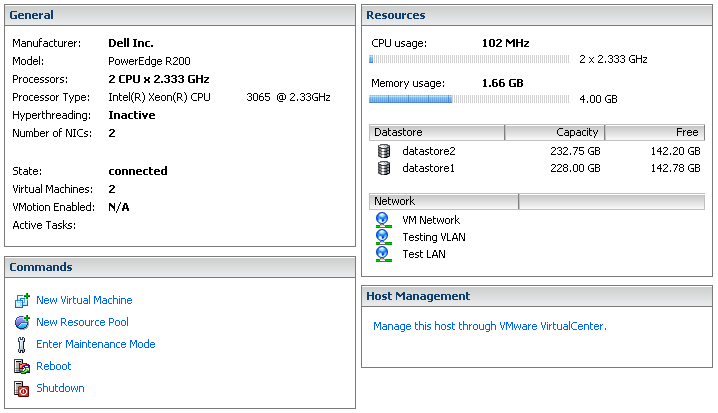

[caption id="attachment_139" align="aligncenter" width="431"

caption="Screenshot showing my datastores and two VMs

running"] [/caption]

[/caption]

After confirming that the VMs I really needed were booting and operational, I shut everything down to move the server back to its spot in my rack. Fortunately, everything came right back up, so the pressure was off.

Now my concerns shifted. If this happened once, what's to stop it from happening again? I needed to figure out why it happened. Fortunately, at nearly the exact moment I started thinking about this, IRC came through for me. "jidar" in #shsc linked to this thread on VMware's forum with exactly the same symptoms. A few posts down was a link to this page, which again matched my experience exactly. It says that U4 updated a number of SATA drivers, including the one for the ICH9 controller in my PowerEdge, and changed the way the drives appear to the hypervisor. As a result, the hypervisor no longer recognized the drives for what they are.

Right now I'm moderately annoyed that an update too small to earn even a minor version bump, on software intended for enterprise use, contained a change with the potential to cause this. On the other hand, I don't expect anyone who really cares about reliability to be using local SATA storage. Ah well, I learned a bit about navigating around ESXi's internals.